During my first month as a teacher, I attended a professional development session on Depth of Knowledge (DOK). We sat in a cold meeting room, looked at assessment items, and rated each one at level 1, 2, 3, or 4. Then we planned how we would teach students to reach the level of thinking required by the level-4 questions. I remember sitting at my table thinking, “But I am a reading teacher! What do I do when the text itself is what is really hard for my kiddos?” For all of its strengths, DOK is a content-agnostic framework. For literacy, this is a problem, because the text plays such an important role in the demand any reading question places on students.

Fast forward many (more than I care to admit) years, and a new framework, one that takes the demands of reading a text into account, is finally available. The Framework to Evaluate Cognitive Complexity in Reading Assessments is based on the expectations of Criterion B.4 of the CCSSO Criteria for Procuring and Evaluating High-Quality Assessments. This criterion requires that the items on summative assessments reflect the range of cognitive demand required by the standards. Typically, this has meant using Bloom’s or DOK to produce a list of verbs or to spread items across DOK levels 1 through 4. But the Criteria suggest another option: a content-specific framework, which, for reading, considers:

- The complexity of the text on which an item is based;

- The range of textual evidence an item requires (how many parts of text[s] students must locate and use to respond to the item correctly);

- The level of inference required; and

- The mode of student response (e.g., selected-response, constructed-response).

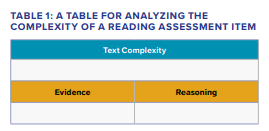

Achieve, a nonprofit dedicated to making sure every student graduates from high school ready to succeed in the college or career of their choice, took this guidance and used it as the basis for a new reading framework. To use the framework, assessment developers fill out a tool that rates each item on an assessment against three factors: Text Complexity (how complex the item’s text is), Evidence (how much of the text a student must use to respond to the item), and Reasoning (what kind of thinking the item requires):

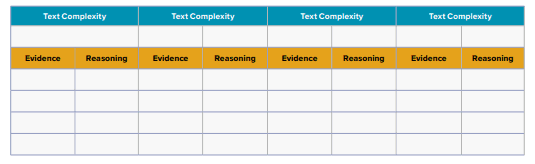

Then, developers look across the test form to ensure that complexity ranges across all three variables, not just one:

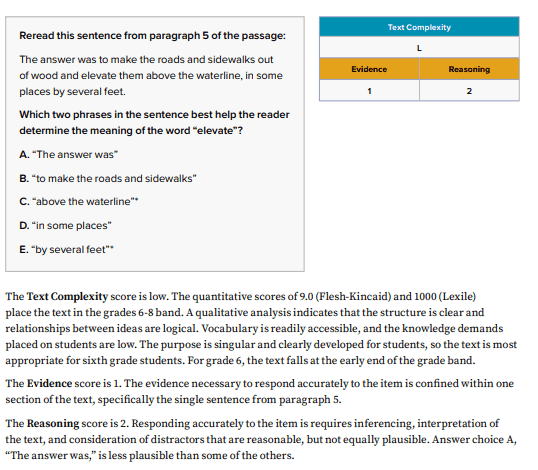

But what does this look like in practice? How do we evaluate a question for its Text Complexity, Evidence, and Reasoning? Check out the example below:

The idea behind this framework is that reading items derive their complexity from several features, so our evaluations of those items should reflect that. Instead of receiving a single numerical score, each item is rated on every variable that contributes to its complexity. Text complexity is listed above evidence and reasoning to signal its importance: the research makes clear that the complexity of the text is the key factor affecting student performance on assessment items. Accordingly, the tool associated with the framework gives text complexity particular emphasis.

Ultimately, the CCSSO Criteria require summative assessments to reflect the complexity of college- and career-ready (CCR) standards. Summative assessments should also capture the range of complexity within those standards, and this framework allows assessment developers to do just that. Previous evaluation methods focused on assigning somewhat arbitrary numerical scores, but with the Achieve tool, each item is rated against ALL of the literacy features that make it complex. This way, assessment vendors can look across a test form to ensure that it includes items spanning a range of complexity, as well as items that are complex for different reasons.

Though this tool was developed with the expectations of summative assessments in mind, we all know that assessment tools often make their way into instruction and professional learning for teachers, just as Bloom’s and DOK did for me as an early teacher. As I have worked with the tool, I have found myself thinking back to that training I attended my first month of teaching. What would a training focused on this new framework have signaled to me as a new educator? What would I have inferred was “important” for my students? Unlike the content-agnostic framework I learned about back then, this tool puts text complexity front and center, not lists of verbs and thinking skills. That emphasis would have changed my instructional focus and my impact on my students. Materials, assessment, professional learning, and classroom instruction are all part of a cohesive system that creates strong learning experiences, and when it comes to teaching reading, the texts we select as worthy of students’ time must always be front and center.

Bloom’s has never been just about the verbs. There are two dimensions to Bloom’s: the cognitive process and the content being worked with. In its full application, then, it aligns well with what you’re discussing here. With reading, the content is inseparable from the complexity of the text. So I guess what I’m saying is that Bloom’s could still get you where you’re going with this new framework. However, Bloom’s has been misunderstood and shallowly applied for decades, so I’m excited to see a framework that builds on its foundation.

DOK is not about the type of thinking indicated by the verb. It considers exactly what students must learn and how deeply they must understand and use that learning in a given context. That is all described by the words and phrases that follow the verb. For example:

DOK 1: Identify the literary elements of a text (e.g., the plot, the characters, the sequence of events). This learning intention requires students to recall information, or “just the facts,” to answer correctly.

DOK 2: Identify how literary devices are used by the text or author. If students only had to identify what literary devices are, or which literary devices appear in the text, this would be DOK 1. However, this intention requires students to use information and basic reasoning to establish and explain, with examples, how the author or text uses craft and structure. That makes it DOK 2.

DOK 3: Identify the explicit and implicit themes in a text and how the author strengthens and supports them. This is DOK 3 because it requires students to use complex reasoning supported by evidence to examine and explain concrete and abstract ideas and how they are strengthened and supported.

DOK 4: Identify the explicit and implicit themes in texts addressing the same topic or written by the same author, and how the texts or author strengthen and support them. This is DOK 4 because it requires students to use extended reasoning supported by expertise to explore and explain with examples and evidence. This will usually take an extended period of time. However, time is a characteristic, not a criterion.

As you can see, all four learning intentions ask students to identify. However, the DOK level depends on exactly what students must identify and how deeply, which is described by the words and phrases that follow the verb.

As for text complexity, that’s a completely separate issue from DOK. Students could be tasked with achieving or surpassing these learning intentions whether they were assigned to read Green Eggs and Ham or Crime and Punishment. With DOK, what matters is the demand of the task, not the demand of the text.

I hope this helps. My upcoming book from Solution Tree explains more about DOK. https://www.solutiontree.com/deconstructing-depth-of-knowledge.html