Why do some students’ test scores look so different across assessments of the same content? The short answer is that not all assessments assess the standards the same way.

Let’s consider a system in which the alignment of a district’s curriculum materials to the standards is deemed “close enough,” the alignment of the district’s interim/benchmark program to the standards is deemed “close enough,” and the alignment of the state’s summative assessment to the standards is deemed “close enough.” None of these components is perfectly aligned, and each has slightly different alignment issues (for example, the curricular materials have one alignment issue and the state summative assessment has another). The result is information about students that is very difficult to interpret without understanding how those alignment issues relate to the intended outcomes, that is, the standards. The example below shows how differently standard 5.NBT.B.6¹ is measured in the enVisionmath 2.0 textbook Topic Assessment versus the Smarter Balanced summative assessment.

Uncovering slight (or major) alignment issues is an important part of creating an aligned system in which all pieces work together to support student progress toward the goals outlined in college- and career-ready mathematics standards. The enVisionmath 2.0 materials adaptation project, facilitated by Student Achievement Partners and led by districts from across the country, allowed us to work collaboratively to identify places where the program’s alignment to the standards could be strengthened. One place in the curriculum materials that required adaptation to improve overall alignment was the textbook-embedded Topic Assessments. In reviewing the Topic Assessments, it was important to consider not only each individual question but also how a group of questions works together to measure a particular standard. Looked at in isolation, a question might be judged “close enough,” but when we looked at all of the questions for a particular standard, we sometimes found that, as a group, they missed the target. This can leave teachers with an incomplete understanding of what their students know and can do in their pursuit of grade-level mathematics.

The careful design of college- and career-ready math standards requires that we shift to assessments that balance conceptual understanding, procedural skill and fluency, and application problems. While the standards are carefully worded to target these competencies, our examination of the enVisionmath 2.0 Topic Assessments found that some standards clearly targeting procedural skill and fluency were measured almost entirely by application questions. In other cases, we found that Standard for Mathematical Practice #1, which requires students to make sense of problems and persevere in solving them, was undermined by assessment problems that directed students to use a specific strategy embedded in the problem. The Topic Assessment Guidance in the enVisionmath 2.0 Guidance documents recommends actions teachers can take to improve the alignment of the assessments. Often, the suggested modification is to remove a specific model or strategy so that the question better assesses students’ understanding of the mathematical concept, procedure, or application. A few annotated examples of the alignment issues found in the enVisionmath 2.0 Topic Assessments and materials appear below.

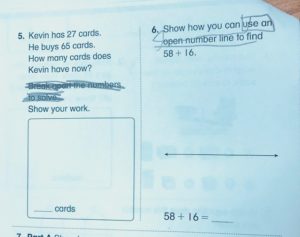

This example shows how one teacher used the guidance documents to modify one of the assessment questions, removing the direction for students to use a specific strategy.

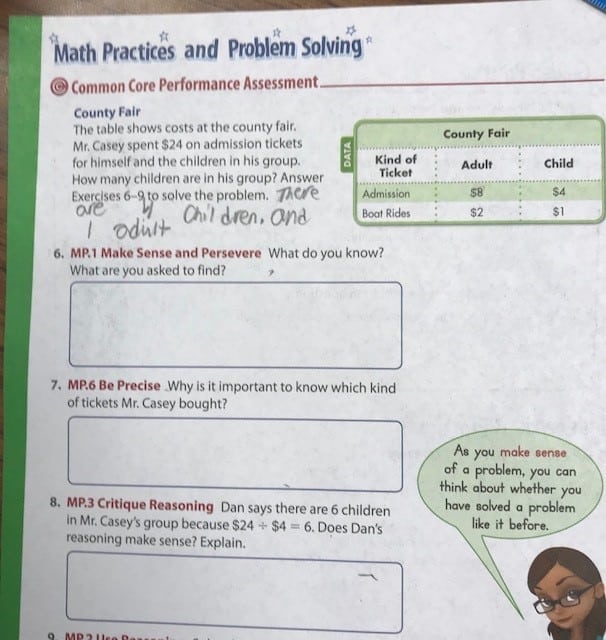

This example shows a student solving the problem on his own and then being asked questions intended to scaffold the problem. Although the scaffolding questions are aligned to the Standards for Mathematical Practice (SMPs), they move the problem further from the intent of the SMPs, not closer.

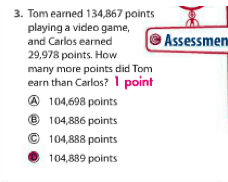

This problem is aligned to a procedural skill and fluency standard (4.NBT.B.4: Fluently add and subtract multi-digit whole numbers using the standard algorithm), but because it is embedded in an application, it gives unclear information about potential sources of a student’s difficulty. Additionally, multiple-choice item formats are not well suited to measuring fluency. In this case, a student needs only to subtract the ones digits of the two numbers to select the correct answer.

One important takeaway from this project is that we must be critical consumers of all the resources we use to build and measure students’ understanding of mathematics. Individual, seemingly small alignment issues can add up and seriously undermine our ability to measure the full intent of a particular standard. Moving forward, we must collectively demand transparency from the creators of different assessments to ensure that all components of our system work together to inform our understanding of what students know and can do in mathematics.

¹ 5.NBT.B.6: Find whole-number quotients of whole numbers with up to four-digit dividends and two-digit divisors, using strategies based on place value, the properties of operations, and/or the relationship between multiplication and division. Illustrate and explain the calculation using equations, rectangular arrays, and/or area models.

² Items retrieved from the Smarter Balanced Grade 5 Claim 1 Item Specifications. Available from http://www.smarterbalanced.org/assessments/; accessed May 2018.

You make excellent points. Thank you for your insight. Our district uses a program and I often cover the strategy they ask the children to use, so that I can better see how my young students will solve it on their own.